CUDA Error Handling: A Definitive Guide

There are right and wrong ways.

Considering CUDA is almost 20 years old, there is a surprising absence of consensus on how to check for and handle errors, even within NVIDIA’s own sample code. There’s universal recognition that you should check error codes, but the developer education materials are not very prescriptive as to how.

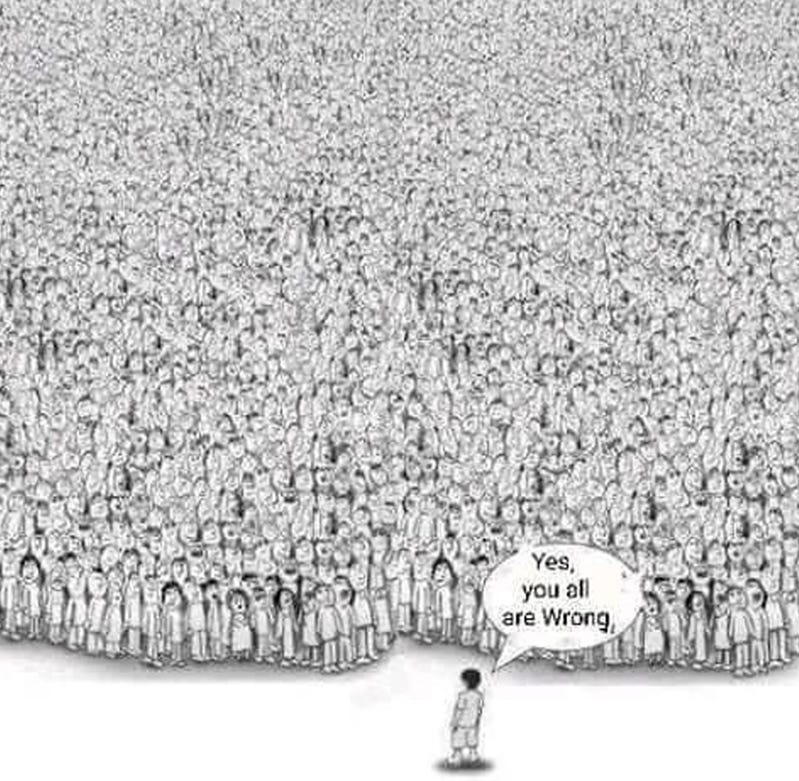

Now.. I am here to tell you that everyone is doing this wrong.

The CUDA Handbook has settled on a fairly rigid set of rules around error handling, which offer the best combination of conciseness, correct error handling, and portability to HIP without extra build steps:

Check every error code.

Use

gotofor error handling and cleanup. (see “goto statement considered occasionally useful”)Do not check error codes for API calls that free resources.

A template for a function that allocates and frees resources looks something like this:

cudaError_t

allocateAndUseEvent( size_t N, … )

{

cudaError_t status;

cudaEvent_t event = 0; // IMPORTANT - code not correct w/o initialization

cuda(EventCreate( &event ) );

…

Error:

cudaEventDestroy( event ); // note - error code not checked!

}If the function allocates resources on behalf of its caller, the code immediately above the Error label would free resources as needed, then return cudaSuccess.

Your Mileage May Vary™. The CUDA Handbook macros impose some policy on their users: the status variable and Error label must be defined, for example. You may wish to implement different policies (the SDK samples typically print an error and exit the process, rather than propagating the error to a caller), give your error label a different name (on the off chance you intermix goto-based error handling for different API families in the same functions), or make other changes based on your application’s needs.

For conciseness, our macros prepend ‘cuda’ to the front of the function on behalf of the caller, so functions such as the above one actually cannot be called with our default error handling macros. That said, it is uncommon to write our own functions that propagate native CUDA errors – more typically, when an error is encountered, we transmute it into our own error handling regime before propagating it. For those rare cases, I left the old CUDART_CHECK macro in place in chError.h.

Under no circumstances, however, should you incorporate cudaGetLastError() into your daily error-checking routine. It is just as amenable to invocation via the error handling macros as other CUDA functions, and almost never needs to be called explicitly. The only circumstance when cudaGetLastError() must be called is when a kernel launch may have been misconfigured. In other cases, such as to detect when a running kernel has encountered a memory fault, you can rely on functions such as cudaDeviceSynchronize() . For more context, take a look at my previous article on asychronous error handling.

If all you wanted was a page-long description of the CUDA Handbook’s philosophy on error handling, you can stop reading now. The rest of the article gives an overview of the state of affairs and, for those building applications portable to AMD’s ROCm platform, an honorable mention to our error handling macros’ provisions for stealth HIPification.

State Of Affairs

We’ll start with a quick overview of the CUDA SDK Sample code.

The interval arithmetic sample has a typical error handling macro that prints a message to stderr and exits the process if CUDA returns an error:

#define CHECKED_CALL(func) \

do { \

cudaError_t err = (func); \

if (err != cudaSuccess) { \

printf( \

“%s(%d): ERROR: %s returned %s (err#%d)\n”, __FILE__, __LINE__, #func, cudaGetErrorString(err), err); \

exit(EXIT_FAILURE); \

} \

} while (0)The NPP samples have error handling macros of their own:

#define NPP_CHECK_CUDA(S) do {cudaError_t eCUDAResult; \

eCUDAResult = S; \

if (eCUDAResult != cudaSuccess) std::cout << “NPP_CHECK_CUDA - eCUDAResult = “ << eCUDAResult << std::endl; \

NPP_ASSERT(eCUDAResult == cudaSuccess);} while (false)But most samples call a macro checkCudaErrors() that is defined in a variety of places, resulting in code that looks like this fragment from graphConditionalNodes.cu:

checkCudaErrors(cudaGraphAddNode(&bodyNode, bodyGraph, NULL, NULL, 0, ¶ms));

checkCudaErrors(cudaGraphInstantiate(&graphExec, graph, NULL, NULL, 0));

// Initialize device memory and launch the graph

checkCudaErrors(cudaMemset(dPtr, 0, 1)); // Set dPtr to 0

printf(”Host: Launching graph with device memory set to 0\n”);

checkCudaErrors(cudaGraphLaunch(graphExec, 0));

checkCudaErrors(cudaDeviceSynchronize());

// Initialize device memory and launch the graph

checkCudaErrors(cudaMemset(dPtr, 1, 1)); // Set dPtr to 1

printf(”Host: Launching graph with device memory set to 1\n”);

checkCudaErrors(cudaGraphLaunch(graphExec, 0));

checkCudaErrors(cudaDeviceSynchronize());There is little to no need for such verbosity in our error handling.

Unobtrusive Error Handling

If you define a macro cuda that prepends “cuda” to the function being called, your API calls become more succinct with no loss of correctness. Let’s rewrite the series of CUDA calls from graphConditionalNodes.cu:

cuda(GraphAddNode(&bodyNode, bodyGraph, NULL, NULL, 0, ¶ms));

cuda(GraphInstantiate(&graphExec, graph, NULL, NULL, 0));

// Initialize device memory and launch the graph

cuda(Memset(dPtr, 0, 1)); // Set dPtr to 0

printf(”Host: Launching graph with device memory set to 0\n”);

cuda(GraphLaunch(graphExec, 0));

cuda(DeviceSynchronize());

// Initialize device memory and launch the graph

cuda(Memset(dPtr, 1, 1)); // Set dPtr to 1

printf(”Host: Launching graph with device memory set to 1\n”);

cuda(GraphLaunch(graphExec, 0));

cuda(DeviceSynchronize());The refactored code is doing exactly the same thing, but more concisely.

For The CUDA Handbook source code, we don’t handle errors the same way that NVIDIA’s sample code does, which typically is to print an error and exit the process. Instead, we use a goto-based error handling scheme to clean up, if necessary, before returning the error to our caller. The macro looks like this:

#define cuda( fn ) do { \

(status) = (cuda##fn); \

if ( cudaSuccess != (status) ) { \

fprintf( stderr, “CUDA Runtime Failure (line %d of file %s):\n\t” \

“%s returned 0x%x (%s)\n”, \

__LINE__, __FILE__, #fn, status, chGetErrorString(status) ); \

goto Error; \

} \

} while (0)The macro enables long sequences of CUDA calls, some of which may fail, to be invoked concisely and correctly. See for example this series of resource allocations and memory copies in stream1Device.cu:

cuda(Malloc( &dptrOut, N*sizeof(float) ) );

cuda(Memset( dptrOut, 0, N*sizeof(float) ) );

cuda(Malloc( &dptrY, N*sizeof(float) ) );

cuda(Memset( dptrY, 0, N*sizeof(float) ) );

cuda(Malloc( &dptrX, N*sizeof(float) ) );

cuda(Memset( dptrY, 0, N*sizeof(float) ) );

cuda(EventCreate( &evStart ) );

cuda(EventCreate( &evHtoD ) );

cuda(EventCreate( &evKernel ) );

cuda(EventCreate( &evDtoH ) );This code is taking advantage of the timing features in CUDA events to separately measure the host-to-device, kernel execution, and device-to-host runtimes. If we were dedicating three lines of code to the error handling, the code fragment would be much harder to understand.

Stealth HIPification

For those aspiring to port their workloads to HIP, AMD’s rough equivalent to CUDA, we need only modify the macro to prepend “hip” instead of “cuda” to the API call. In the CUDA Handbook source code, this prompted me to split the headers into a chError_cuda.h and chError_hip.h. When using this approach to HIPify, the preprocessor also must be enlisted to transform error codes and constants such as cudaMemcpyHostToDevice. Additionally, our goto-based error handling scheme requires that we explicitly ignore the error codes from functions such as cudaFree() and cudaStreamDestroy(); so the HIP edition of our error handling header file must include preprocessor transformations for those functions, as well.

I wish the itinerant maintainer of the HIPify-perl and HIPify-clang tools would focus instead on a canonical header file to solve this problem in an officially-supported manner; in the meantime, would-be adopters of HIP are left to either use the preprocessor, as I have chosen to do, or use source-to-source translation to preprocess their source files.

Conclusion

Error handling is at once pervasive and prone to pitfalls. CUDA developers can write incorrect code, or code that is doing superfluous error checks, with disturbing ease. I’d encourage anyone maintaining a significant CUDA code base to periodically do an “error handling audit,” and make sure errors are being checked and handled correctly.