This ML Workload Runs 30x Faster w AVX-512

Register-Register Lookups FTW!

Copyright (C) 2025 by Nicholas Wilt. All rights reserved.

When Tim Dettmers et al. published QLoRA (Quantized Low-Rank Adaptation), a memory-efficient method for fine-tuning large language models, they introduced the 4-bit NormalFloat (NF4) representation for quantization. Quoting from their paper, NF4 is “an information theoretically optimal quantization data type for normally distributed data that yields better empirical results than 4-bit Integers and 4-bit Floats.” Section 3 of the paper describes how they arrived at the sixteen (16) values representable by NF4, and Appendix E enumerates them:

constexpr float NF4_LUT[16] = {

-1.0,

-0.6961928009986877,

-0.5250730514526367,

-0.39491748809814453,

-0.28444138169288635,

-0.18477343022823334,

-0.09105003625154495,

0.0,

0.07958029955625534,

0.16093020141124725,

0.24611230194568634,

0.33791524171829224,

0.44070982933044434,

0.5626170039176941,

0.7229568362236023,

1.0

};It is pretty wild to consider that the venerable FP32 data type can be usefully distilled into 4 bits by simply picking 16 (sixteen) of the 4 billion or so values representable by FP32, and picking the closest one; but when you think about it, that is what all FP4 representations do. It’s just a bit jarring to see such arbitrary-seeming values written out to double-precision accuracy.

So, how does one convert to and from this representation? From is easy: a simple lookup into the NF4_LUT table above. What about the other direction (“quantization”), i.e. how to convert a value in the range [-1.0, 1.0] to the closest of the 16 encodings of NF4 enumerated above? The value 0.6, for example, falls between NF4_LUT[13]=0.5626 and NF4_LUT[14]=0.7230 and is closest to NF4_LUT[13]. So the (zero-based) index, and the correct NF4 encoding for 0.6, is 13=0xd.

A brute force implementation would simply go through all 16 entries of the lookup table, compute the distance to the input value, and report the index that was closest. That code looks like this:

int

float_to_NF4_0( const float *base, size_t N, float f )

{

int ret = 0;

float diff = fabsf( base[0]-f );

for ( size_t i = 1; i < 16; i++ ) {

float thisdiff = fabsf( base[i]-f );

if ( thisdiff < diff ) {

diff = thisdiff;

ret = i;

}

}

return ret;

}This algorithm works for any lookup table - obviously you pass NF4_LUT as the first parameter and 16 as the second. (The parameter N is left over from implementations that supported problem sizes other than 16.)

But looking at the NF4_LUT array, and considering the problem from first principles, reasonably would expect that their structure (they are in sorted order) could be exploited somehow. Binary Search comes to mind; and for my part, I was reminded of a trick that I learned from Jon Bentley’s Programming Pearls (1986), a compendium of his popular monthly column that used to run in Communications of the ACM. Bentley’s optimization, intended for fixed-sized arrays, enables the Binary Search probes to be done with steadily decreasing powers of 2, in an elegantly unrolled loop. For NF4, that looks like this:

int

float_to_NF4_1( const float *base, size_t N, float f )

{

int i = 0;

if ( f >= base[i+8] ) i += 8;

if ( f >= base[i+4] ) i += 4;

if ( f >= base[i+2] ) i += 2;

if ( f >= base[i+1] ) i += 1;

return i + ((base[i+1]-f)<(f-base[i]));

}After the Binary Search is complete, 0<=i<15 and the correct return value must be i or i+1. The final return value is computed by incrementing i if the input value is closer to NF4_LUT[i+1]. This replaces the exhaustive search of the brute force algorithm with Binary Search.

Are further improvements possible? I thought you would never ask!

Midpoints FTW

The bitsandbytes implementation of the same quantization algorithm does something similar, but more clever, because it eliminates the need for that final check and conditional increment: this code precomputes the 15 midpoints between the 16 LUT entries, and constructs the 4-bit index by evaluating a decision tree (at most 4 if statements):

inline

unsigned char

dQuantizeNF4_0(float x)

{

// the values for this tree was generated by test_normal_map_tree

// in the file tests/test_functional.py

if(x > 0.03979014977812767f)

if(x > 0.3893125355243683f) // 1

if(x > 0.6427869200706482f) // 11

if(x > 0.8614784181118011f) // 111

return 0b1111;

else

return 0b1110;

else

if(x > 0.5016634166240692f) // 110

return 0b1101;

else

return 0b1100;

else

if(x > 0.2035212516784668f) // 10

if(x > 0.2920137718319893f) // 101

return 0b1011;

else

return 0b1010;

else

if(x > 0.1202552504837513f) // 100

return 0b1001;

else

return 0b1000;

else

if(x > -0.33967943489551544f) // 0

if(x > -0.13791173323988914f) // 01

if(x > -0.045525018125772476f) // 011

return 0b0111;

else

return 0b0110;

else

if(x > -0.23460740596055984f) // 010

return 0b0101;

else

return 0b0100;

else

if(x > -0.6106329262256622f) // 00

if(x > -0.4599952697753906f) // 001

return 0b0011;

else

return 0b0010;

else

if(x > -0.8480964004993439f) // 000

return 0b0001;

else

return 0b0000;

}I don’t know about you, but I find this code as clear as mud. It clarifies things to explicitly create a new lookup table from the midpoints:

constexpr float NF4_LUT_mid[16] = {

0.0f,

NF4_LUT[ 0] + 0.5f * ( NF4_LUT[ 1] - NF4_LUT[ 0] ),

NF4_LUT[ 1] + 0.5f * ( NF4_LUT[ 2] - NF4_LUT[ 1] ),

NF4_LUT[ 2] + 0.5f * ( NF4_LUT[ 3] - NF4_LUT[ 2] ),

NF4_LUT[ 3] + 0.5f * ( NF4_LUT[ 4] - NF4_LUT[ 3] ),

NF4_LUT[ 4] + 0.5f * ( NF4_LUT[ 5] - NF4_LUT[ 4] ),

NF4_LUT[ 5] + 0.5f * ( NF4_LUT[ 6] - NF4_LUT[ 5] ),

NF4_LUT[ 6] + 0.5f * ( NF4_LUT[ 7] - NF4_LUT[ 6] ),

NF4_LUT[ 7] + 0.5f * ( NF4_LUT[ 8] - NF4_LUT[ 7] ),

NF4_LUT[ 8] + 0.5f * ( NF4_LUT[ 9] - NF4_LUT[ 8] ),

NF4_LUT[ 9] + 0.5f * ( NF4_LUT[10] - NF4_LUT[ 9] ),

NF4_LUT[10] + 0.5f * ( NF4_LUT[11] - NF4_LUT[10] ),

NF4_LUT[11] + 0.5f * ( NF4_LUT[12] - NF4_LUT[11] ),

NF4_LUT[12] + 0.5f * ( NF4_LUT[13] - NF4_LUT[12] ),

NF4_LUT[13] + 0.5f * ( NF4_LUT[14] - NF4_LUT[13] ),

NF4_LUT[14] + 0.5f * ( NF4_LUT[15] - NF4_LUT[14] ),

};and rewrite the function, replacing the constants with references to NF4_LUT_mid. The resulting code highlights that we’re building indices one bit at a time:

inline

unsigned char

dQuantizeNF4_1(float x)

{

// the values for this tree was generated by test_normal_map_tree

// in the file tests/test_functional.py

if(x > NF4_LUT_mid[0b1000] )

if(x > NF4_LUT_mid[0b1100]) // 1

if(x > NF4_LUT_mid[0b1110]) // 11

if(x > NF4_LUT_mid[0b1111]) // 111

return 0b1111;

else

return 0b1110;

else

if(x > NF4_LUT_mid[0b1101]) // 110

return 0b1101;

else

return 0b1100;

else

if(x > NF4_LUT_mid[0b1010] ) // 10

if(x > NF4_LUT_mid[0b1011] ) // 101

return 0b1011;

else

return 0b1010;

else

if(x > NF4_LUT_mid[0b1001] ) // 100

return 0b1001;

else

return 0b1000;

else

if(x > NF4_LUT_mid[0b0100]) // 1

if(x > NF4_LUT_mid[0b0110]) // 11

if(x > NF4_LUT_mid[0b0111]) // 111

return 0b0111;

else

return 0b0110;

else

if(x > NF4_LUT_mid[0b0101]) // 110

return 0b0101;

else

return 0b0100;

else

if(x > NF4_LUT_mid[0b0010] ) // 10

if(x > NF4_LUT_mid[0b0011] ) // 101

return 0b0011;

else

return 0b0010;

else

if(x > NF4_LUT_mid[0b0001] ) // 100

return 0b0001;

else

return 0b0000;

}If the pattern doesn’t immediately jump out at you, look at an innermost if statement:

if(x > NF4_LUT_mid[0b1111]) // 111

return 0b1111;

else

return 0b1110;and note that if the condition is met, the bit that was tested is set. The same is true of the outer if statements; they are just further removed from the index construction. All of the statements within a given conditional, set the bits controlled by all of the if statements that led to that code path.

Another way to look at this code: each if statement conditionally OR’s a bit into the final output.

Sound familiar? It should - it is akin to Bentley’s optimized Binary Search!

Enter AVX512

Aside: The bitsandbytes code referenced above is CUDA code, not CPU code; but we were able to lift it verbatim to use in our CPU benchmarks. I am not sure if the nested if statements compile to the best GPU code – I suspect that putting the LUT in shared memory or using warp shuffles would do the same calculation more efficiently – but for now, I want to take a look at how we can combine Bentley’s awesome Binary Search optimization with the VPERMPS instruction from AVX512 to compute 16 of these indices at a time for a humongous speedup.

VPERMPS, which may be accessed via intrinsics such as _mm512_permutexvar_ps(), essentially performs a register-to-register lookup, using the least significant 4 bits of the SIMD lanes in one register to index into another register. On both AVX2 and AVX512, VPERMPS is one of the few lane-crossing instructions that can be brought to full effect across the entire register. (Many AVX2 instructions, in particular, operate separately on the top and bottom halves of the register.)

An AVX-512 implementation of the quantization algorithm may be implemented as follows:

void

float_to_NF4_16( uint32_t *out, const float *base, size_t N, const float *f )

{

__m512i v_i = _mm512_setzero_si512();

__m512 v_f = _mm512_load_ps( f );

const __m512 v_lut = _mm512_loadu_ps( &NF4_LUT_mid[0] );

auto round = [v_lut, &v_i, v_f]( int N2 ) -> void {

__m512i v_i_N2 = _mm512_add_epi32( v_i, _mm512_set1_epi32( N2 ) );

__m512 v_lut_i_N2 = _mm512_permutexvar_ps( v_i_N2, v_lut );

__mmask16 mask_gt = _mm512_cmp_ps_mask( v_f, v_lut_i_N2, _CMP_GE_OS );

v_i = _mm512_mask_add_epi32( v_i, mask_gt, v_i, _mm512_set1_epi32( N2 ) );

};

round( 8 );

round( 4 );

round( 2 );

round( 1 );

_mm512_store_si512( (__m512i *) out, v_i );

}The lambda is used for a DRY (do not repeat yourself) pattern, echoing the unrolled Binary Search from before. It’s as clear as intrinsics-based AVX512 code can be, which is to say, not very. But it is fast as hell! The whole function compiles to about 18 machine instructions, with no loops, just a fallthrough execution constructing 16 output values in parallel:

vmovaps zmm0, zmmword ptr [rcx]

vmovdqa64 zmm1, zmmword ptr [rip + NF4_LUT_mid]

vcmpgeps k1, zmm0, dword ptr [rip + NF4_LUT_mid+32]{1to16}

vpbroadcastd zmm2 {k1} {z}, dword ptr [rip + .LCPI0_0]

vpord zmm3, zmm2, dword ptr [rip + .LCPI0_1]{1to16}

vpermd zmm4, zmm3, zmm1

vcmpleps k1, zmm4, zmm0

vmovdqa32 zmm2 {k1}, zmm3

vpord zmm3, zmm2, dword ptr [rip + .LCPI0_2]{1to16}

vpermd zmm4, zmm3, zmm1

vcmpleps k1, zmm4, zmm0

vmovdqa32 zmm2 {k1}, zmm3

vpord zmm3, zmm2, dword ptr [rip + .LCPI0_3]{1to16}

vpermd zmm1, zmm3, zmm1

vcmpleps k1, zmm1, zmm0

vmovdqa32 zmm2 {k1}, zmm3

vmovdqa64 zmmword ptr [rdi], zmm2

vzeroupper

retOn my old AMD CPU, it is about 30x faster than the fastest scalar implementation, which ironically is the brute force algorithm.

A 30x Speedup

The output from my test program, which is checked into the Parallel Programmer GitHub repository, reads as follows and shows a 29.55x speedup from AVX-512:

gold: 23.64 clocks/iteration

binsearch: 74.22 clocks/iteration

bitsandbytes: 48.63 clocks/iteration

AVX512: 0.80 clocks/iteration(The “clocks” are just RDTSC ticks, which are an apples-to-apples comparison as long as the comparisons are being done on the same CPU and the CPU implements invariant RDTSC. On Linux, you can check for this CPU feature by searching /proc/cpuinfo for constant_tsc.)

A more recent AVX-512 implementation might be even faster. My testing was done on my trusty Ryzen 7 7700X, the first available AVX-512 capable CPU from AMD.

An AVX2 implementation would be more complicated, because although we do have a register-to-register lookup, there are only 8 32-bit lanes, so the LUT does not fit in a single register. So on the one hand, probably you’d have to select between two possible LUT values in each of the 4 rounds of the calculation; on the other, AVX2 implementations tend to have such great microarchitectures that it’d still be very fast. The VPBLEND instruction would be our friend, maybe for some other day.

On Intrinsics v Hand-Coding

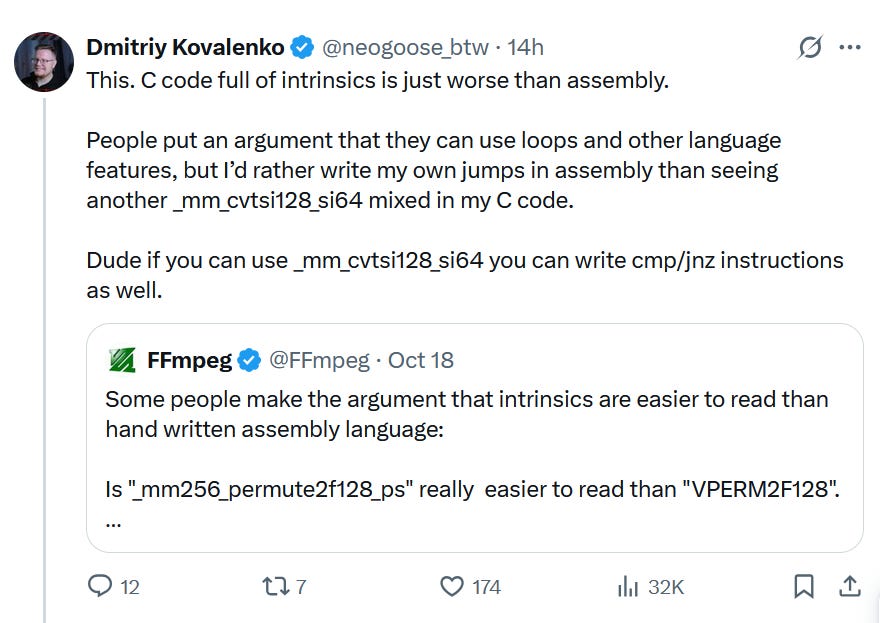

One final word: just yesterday, a Twitter commentator intimated that anyone using intrinsics could instead be hand-coding in assembly language.

I used to belong in that camp, sort of; though in my defense 1) I was writing image processing code where the C programming language’s compulsion to promote short integers to the machine’s natural word length was constantly inhibiting my efforts at SWAR, and 2) I was competing with 1980s-era compiler technology, before SIMD was widely available, on architectures that hadn’t benefited from decades of hardware/software codesign between compilers and hardware ISAs. In the intervening years, I’ve grown to appreciate compilers that will do register allocation and instruction scheduling on my behalf.

Apropos to the topic at hand, the exercise I invite you to undertake – hypothetically, if not literally – is to first, look over the generated code and see if you could improve upon it by hand-coding.

Next, imagine how we transform this function into one that generates the actual intended output of 4 bits per element. Currently it computes 16x32b elements. To compress these further into 4b elements invites a 4x unroll of this function that demotes and interleaves 32b->16b->8b->4b, which would unlock rich ILP opportunities. It might be a day’s work with intrinsics, but it would be many times more work to hand-code, and unlikely to be faster.